Saturday Links: Icebergs, World Models, and Regulating Emotional Interactions

Job-loss studies continue to mount up, plus regulations for chatbot interactions, world models, and the shape of the AI frontier.

I hope your holiday break is going wonderfully! To keep you up to date with the news: this week, the world received gifts in the form of microscopic robots and powerful new small models from NVIDIA, which also bought itself a gift by acquiring (though not officially "acquiring") chipmaker Groq for $20B. In the US, the week also brought a clampdown on new toys, with the ban on DJI drones and equipment implicitly entering into force.

On to the longer stories:

- MIT's Project Iceberg simulates the US economy and predicts that 11% of all US jobs are already automatable by AI. (The actual study is here.) In this study, MIT researchers modelled US occupations in a simulation and traced their interactions. The headline conclusion that up to 11% of US jobs are already automatable has been quite widely circulated. This doesn't mean those jobs will be automated (or automated quickly), so the short-run implications are not that severe. Realistically, though, if the results are correct (and I actually wonder if they are an underestimate), the impact on the job market over the next 5-10 years is going to be severe. The percentage will surely grow, and deployment will accelerate. This still doesn't mean the people impacted won't be able to move to new roles, but a vision for what those roles are is increasingly needed.

- The Man Who Can't Be Moved. A nice article summarizing Yann LeCun's view that world models are still the most critical frontier in AI research. (For the record, LeCun responded that he can be moved.) LeCun recently left Meta to start a new AI lab, so hopefully we'll see his ideas take flight there. I agree with his central point that LLMs are not enough on their own, and we need other structures (or at least other layers of abstraction within LLMs) if we're going to achieve the consistency and predictive power needed for real-world actions.

- Safety Alignment of LMs via Non-cooperative Games. This paper by researchers at Meta's FAIR lab uses pairs of agents that compete in an attacker-defender cycle to align the target agent. Alignment, in this case, means resistance to prompts that elicit dangerous responses or actions. This co-evolution makes a lot of sense and is likely a lot better than static methods. It still suffers from the problem of how to bootstrap the attacker agent sufficiently to get good coverage of the space of possible attacks.

- China issues draft rules to regulate AI with human-like interaction. There have been long-running concerns about the impact of human-AI interactions in counselling and even just informal emotional relationships (including conversations potentially influencing people in ways that helped precipitate suicide). This seems to be the first time that a regulator is stepping in to try to set rules for how such systems should behave. This is a really difficult area to regulate. On the one hand, almost all chatbots form a minor bond with users; on the other, this is the first time we've had such an anthropomorphised form of technology. There is now a huge range of chatbots, from purely work-focused to general entertainment to sex-conversation bots. Part of the challenge will be determining when the rules apply and fighting the inevitable rush to exploit loopholes.

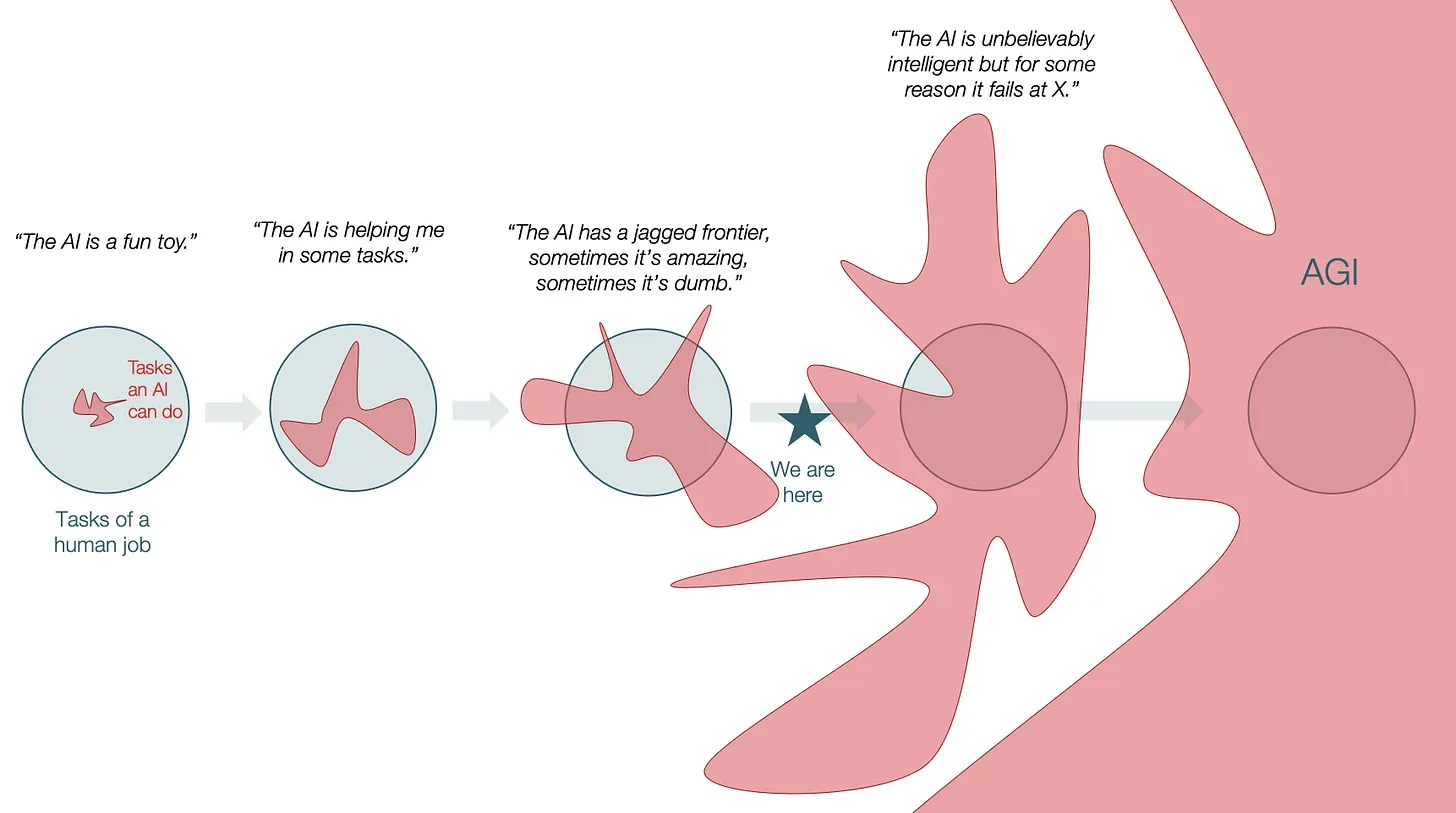

- The Shape of AI: Jaggedness, Bottlenecks and Salients. Ethan Mollick has a nice post summing up the general level of progress in AI and how he expects it to continue to be "Jagged" (amazing at times, terrible at others). There are lots of nice visuals in the post, but I particularly like this one from @TomasPueyo:

Realistically, we are probably still in that third stage of the Jagged frontier and will be for a while longer, but the "amazing" areas of performance do continue to add up.

Wishing you Happy Holidays.