Saturday Links: Memory, Closing Commerce Loops, and LEGO Building

Shopping via AI, LEGO creation, and keeping LLMs safe (or not).

This week I was roasted in Austin, rained on in New York, and now I am enjoying warm sunshine in Lausanne (phew). Still, it was another exciting week in AI! Here are this week's links:

- When Will We Give AI True Memory? This is a thoughtful interview with the founder of Pinecone (a vector database often used to implement retrieval-augmented generation for LLMs), covering memory for AI. There is quite a bit of nuance on how external memory works. However, I think something important is missing. If LLMs are to work well with memory, storing facts, retrieving them, and judging their relevance won't be enough: the models will also have to continually rebalance and relearn, compacting those facts and weaving them into everything else they know. This will, by definition, be a lossy process (just like all LLM training). Human memory is frustrating because it is lossy, but it is also truly amazing in that it hits upon subtle connections that no storage query would ever express.

- ChatGPT + Shopify? Over the past couple of weeks, a few clues and rumours have emerged about an integration between ChatGPT and Shopify. The linked article points to strings referencing Shopify checkout in OpenAI's public web code. Nothing has been officially announced, but any tie-up would be significant. Shopify is continuously trying to expand its buyer-side reach (with the Shop App, for example), and a deal would give ChatGPT a monetization channel that isn't full advertising but could have significant volume. No doubt ChatGPT could do this with many different partners over time; the question will be how it matches queries with affiliate links. Perplexity has also launched "Buy with Pro". All these moves are a clear sign that industry players expect AI chatbots to become a significant driver of purchase intent. They also suggest that the way to get exposure in a high-traffic LLM won't be "AI SEO" but partner and affiliate deals.

- Musk's xAI updates Grok chatbot after 'white genocide' comments. xAI's Grok made the news this week by responding with controversial statements about the political and legal situation of white citizens in South Africa. The company later said that an update had changed certain policies and that it had been rolled back. Much of the coverage has focused on the charged nature of the comments. No doubt something basic went off the rails here (some guardrails were likely disabled). However, this problem really is inherent to LLMs: no matter how many guardrails are in place, the system is a compacted, interwoven representation of much of the public internet, and the model is constantly being refined, quantized, and compacted. It is unsurprising that some queries will generate divisive results.
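To make the memory item above a little more concrete, here is a toy illustration of the store / retrieve / judge-relevance loop, plus the kind of lossy compaction I'm arguing models will need. This is a minimal sketch and not how Pinecone or any real product works: the `ToyMemory` class, the hand-written three-dimensional "embeddings", and the merge-by-averaging `compact` step are all hypothetical. A real system would use a learned embedding model and an approximate nearest-neighbour index.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class ToyMemory:
    """Hypothetical in-memory store of (embedding, text) pairs; not a real vector DB."""
    items: list[tuple[list[float], str]] = field(default_factory=list)

    def store(self, embedding: list[float], text: str) -> None:
        self.items.append((embedding, text))

    def retrieve(self, query: list[float], k: int = 2) -> list[str]:
        # Relevance = cosine similarity to the query; keep the top k facts.
        ranked = sorted(self.items, key=lambda item: cosine(query, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

    def compact(self, threshold: float = 0.95) -> None:
        # Lossy step (hypothetical): merge facts whose embeddings are nearly
        # identical, averaging the vectors and keeping only the first fact's text.
        merged: list[tuple[list[float], str]] = []
        for emb, text in self.items:
            for i, (m_emb, m_text) in enumerate(merged):
                if cosine(emb, m_emb) >= threshold:
                    merged[i] = ([(x + y) / 2 for x, y in zip(emb, m_emb)], m_text)
                    break
            else:
                merged.append((emb, text))
        self.items = merged


mem = ToyMemory()
mem.store([1.0, 0.0, 0.0], "User lives in Lausanne")
mem.store([0.99, 0.05, 0.0], "User is based in Lausanne")
mem.store([0.0, 1.0, 0.0], "User prefers short answers")
print(mem.retrieve([0.0, 1.0, 0.0], k=1))
mem.compact()
print(len(mem.items))
```

Note what `compact` throws away: the second Lausanne fact's wording is gone for good. That is the lossy trade-off in miniature, and it shows how a simple similarity threshold only catches near-duplicates, not the subtle connections human memory makes.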

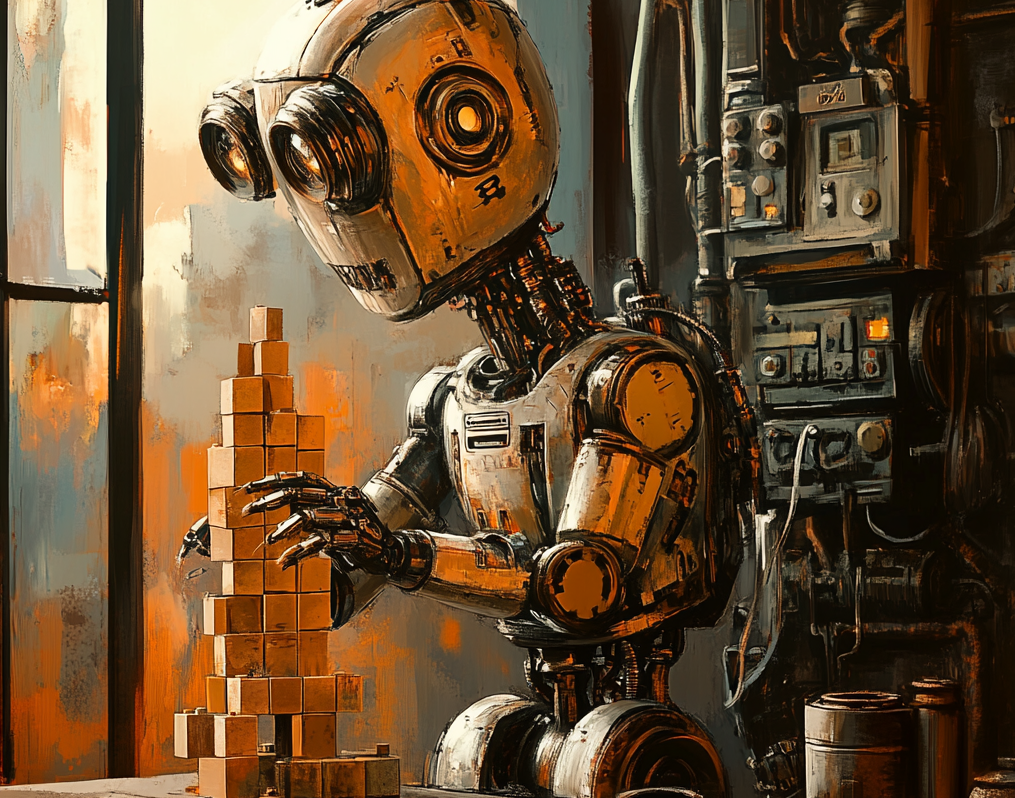

- Generating Physically Stable and Buildable LEGO® Designs from Text. Something more fun... a CMU team has developed a custom LLM that uses next-token prediction to build physically stable and buildable LEGO models. The training approach is to use a physics checker to reject infeasible combinations. The checker also seems to be used at inference time to make sure you never get an infeasible outcome. The models currently use only simple blocks, but it's interesting to see. I suspect this approach might not scale particularly well as is, since introducing more pieces explodes the search space. What I like about the approach is the use of physics feedback in training. Presumably, this gives the model a more accurate sense of what does and does not work. Also, using a checker at runtime means the LLM can search for solutions. My guess, though, is that it will ultimately make sense to have a specialist planning system based on symbolic logic build the actual models, with an LLM prompting it (using it as a tool) and evaluating the results. There is also a demo to try. Write-up: Tom's Hardware.

- OpenAI launches Codex. This is a hosted version of OpenAI's command-line (CLI) coding tools, running on OpenAI's own cloud. Effectively, agents can work on your codebase independently, much as something like Devin does. This comes on the heels of OpenAI's acquisition of Windsurf, the AI coding IDE, so OpenAI is clearly doubling down on coding use cases. This seems logical on the surface, since coding is one of the clearest AI use cases. I wonder, though, whether they would be better off letting a larger dev-tool ecosystem grow around them first. A forest of third-party tools built on OpenAI is harder for rivals to compete with and yields many more apps that use OpenAI.
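Going back to the LEGO item, the "checker in the loop" idea is easy to sketch: generate one brick at a time, and reject any proposed brick that fails a feasibility check before moving on. This is a minimal illustration of rejection sampling under heavy assumptions, not the CMU team's code. The 2D grid, the `Brick` type, the `is_feasible` rules (in bounds, no overlap, something directly underneath), and the uniform `propose` function standing in for the LLM's next-token sampling are all hypothetical. A real physics checker would reason about forces and connections, not just "is there a brick below".

```python
import random
from typing import NamedTuple

GRID_WIDTH = 6  # hypothetical build-plate width (columns)


class Brick(NamedTuple):
    x: int      # leftmost column
    layer: int  # 0 = ground
    width: int  # number of columns the brick covers


def cells(brick: Brick) -> set[tuple[int, int]]:
    """Grid cells (column, layer) occupied by a brick."""
    return {(brick.x + i, brick.layer) for i in range(brick.width)}


def is_feasible(brick: Brick, placed: list[Brick]) -> bool:
    """Toy stand-in for a physics checker: in bounds, no overlap, and supported."""
    if brick.x < 0 or brick.x + brick.width > GRID_WIDTH or brick.layer < 0:
        return False
    occupied = set().union(*(cells(b) for b in placed))
    if cells(brick) & occupied:
        return False
    if brick.layer == 0:
        return True
    # Supported if at least one cell directly below the brick is occupied.
    return any((cx, brick.layer - 1) in occupied for cx, _ in cells(brick))


def propose(rng: random.Random, placed: list[Brick]) -> Brick:
    """Hypothetical stand-in for sampling the next brick from a model (uniform here)."""
    top = max((b.layer for b in placed), default=-1)
    return Brick(x=rng.randrange(GRID_WIDTH), layer=rng.randint(0, top + 1),
                 width=rng.choice([1, 2]))


def generate(n_bricks: int, max_tries: int = 50, seed: int = 0) -> list[Brick]:
    """Build brick by brick, rejecting any proposal the checker says is infeasible."""
    rng = random.Random(seed)
    placed: list[Brick] = []
    for _ in range(n_bricks):
        for _ in range(max_tries):
            candidate = propose(rng, placed)
            if is_feasible(candidate, placed):
                placed.append(candidate)
                break
        else:
            break  # no feasible brick found within max_tries; stop early
    return placed


design = generate(10, seed=1)
for brick in design:
    print(brick)
```

Because every accepted brick passed the check against everything placed before it, the output can never contain an unsupported or overlapping brick. The cost shows up in `propose`: with only two brick widths the candidate space is tiny, but every extra piece type multiplies what the sampler can emit, and with it the number of rejected proposals. That is the scaling concern above.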

Wishing you a great weekend.