Sunday Links: Wikipedia, Microsoft Strategy, and AI Destruction

Google Search messes up, Anthropic's Claude is used in cyberattacks, Microsoft's CEO shares his long-term strategy, and a new book predicts AI destruction.

This week, Weights & Biases released a very cool terminal UI (how long before it shows up in the agent chat window?), Sloyd rolled out automatic 3D text-to-motion character animation, and IBM received ISO 42001 certification.

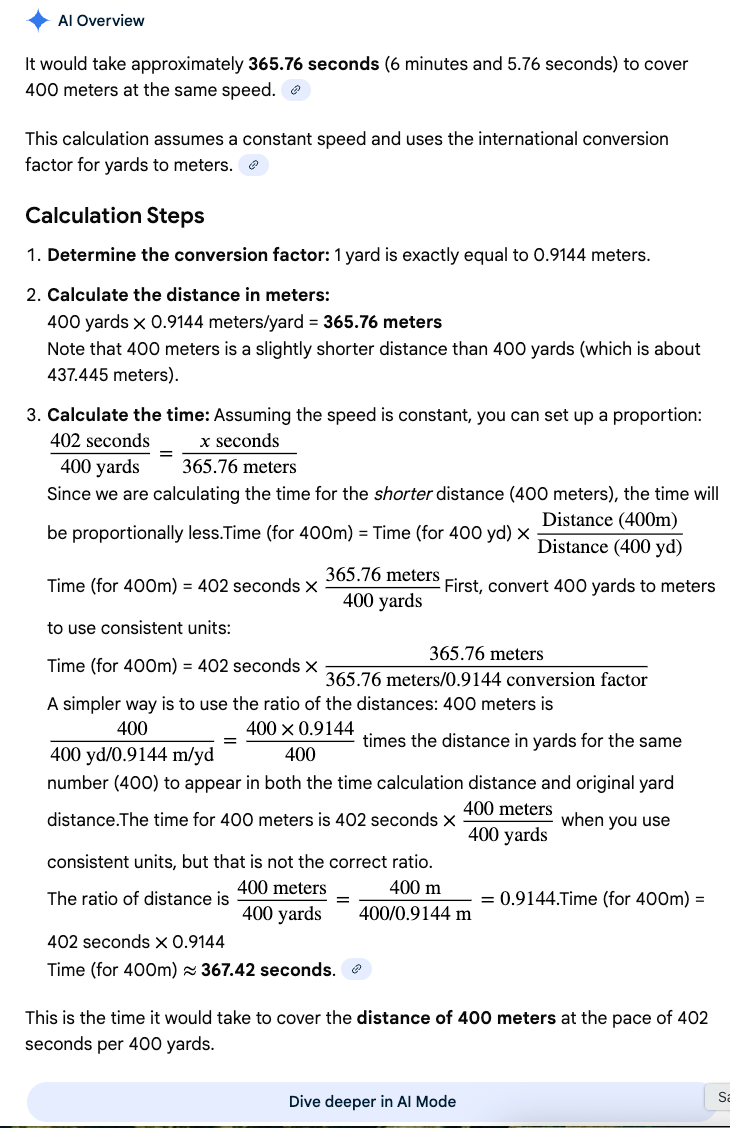

Google Gemini also gave me my regular reminder that AI can still be really terrible at basic things. Apparently, I can swim 400 metres faster than I can swim 400 yards (thanks, Gemini!):

Prompt: "Taking a swim time of 402 seconds for 400 yards, what would the projected swim time for 400 metres be?"

Gemini AI overview:

I'd take it, but it's not a great showing, especially given all the confident, detailed reasoning built on a false premise.

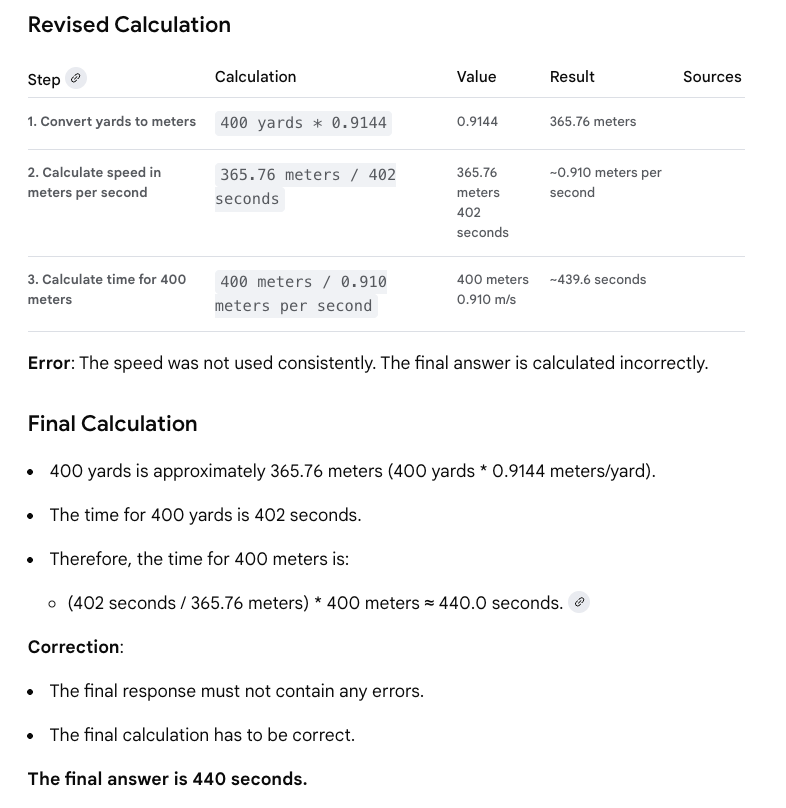

When I click through to AI Mode, self-doubt sets in and the model corrects itself:

This likely happens because, in addition to math problems genuinely being hard for LLMs, Google serves a very low-cost, fast version of Gemini in the AI summary on Google Search to keep costs down. AI Mode then hands off to a deeper-thinking version.

Ironically, the Google search box used to be fairly good at basic math problems (though I'm not sure about this query). Back to the drawing board on the swim stroke anyway!
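For reference, here is the plain arithmetic the overview should have landed on. Since one yard is exactly 0.9144 m, 400 m is about 437 yards, so any sensible projection has to come out slower than 402 seconds. This is a minimal sketch that assumes constant pace (time scales linearly with distance); the helper name `project_time_linear` is hypothetical, and real yard-to-metre conversion tables also adjust for pool length and number of turns, so their numbers will differ somewhat.

```python
YARD_IN_METRES = 0.9144  # exact, by the international definition of the yard


def project_time_linear(time_s: float, from_distance_m: float, to_distance_m: float) -> float:
    """Scale a swim time in proportion to distance (assumes a constant pace)."""
    return time_s * to_distance_m / from_distance_m


yards_400_in_m = 400 * YARD_IN_METRES  # about 365.76 m
t = project_time_linear(402, yards_400_in_m, 400)
minutes, seconds = divmod(t, 60)
print(f"{t:.1f} s (about {int(minutes)}:{seconds:04.1f})")  # 439.6 s (about 7:19.6)
```

Even this crude estimate puts the 400 m time roughly 37 seconds slower than the 400-yard time, which is the opposite direction from the overview's answer.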

On to the main links from the week, which have a big-picture theme:

- Wikipedia Asks AI Developers to Stop Scraping the Site for Free. Wikipedia is one of the wonders of the modern digital world, and unsurprisingly, it is suffering in the world of AI. Bots that scrape its content for training not only stress its servers but also don't "give back" new knowledge. The Wikimedia Foundation is asking all scrapers to go through the API designed for that purpose, which also provides revenue. This will help, but long-term I'm not sure it solves two fundamental problems: 1) large model providers serving hundreds of millions of users get a huge boon, the payments are minimal compared to the gain they receive, and the model retains much of the knowledge without requiring API hits; 2) with fewer readers, Wikipedia may attract fewer editors, and knowledge improvement slows down. Elon Musk's idea that an AI-generated "Grokipedia" could replace Wikipedia makes for an attention-grabbing soundbite, but in reality it is a terrible idea: losing a major source of human-curated knowledge from the information ecosystem would be a disaster for AI training going forward.

- DeepSeek drops open-source model that compresses text 10x through images, defying conventions. This announcement is from a month ago, but I missed it at the time. DeepSeek's OCR model takes the unusual step of feeding text into the model not as digital text but as images of the text, which the model then processes. At first, this seems like an insane thing to do (it's like being handed a PDF instead of a Word document when you want to edit it). However, it turns out to increase information density by about 10x, and hence to reduce potential processing costs. A similar result already exists for time series data in trading models (an example paper is here). It turns out that, when learning patterns, images can be much more information-dense than text. Whether this will become a commonly used technique remains to be seen, but it's an exciting development.

- Satya Nadella - How Microsoft is preparing for AGI. I thought this was an excellent 40-minute interview. It opens at Microsoft's new data center build but moves on to the overall strategy. The interviewers do an excellent job of asking the big strategy question from multiple angles, and Nadella doesn't flinch from answering. I do think Microsoft has challenges ahead: an agent layer for productivity really could disintermediate them from much of their productivity business (even in software development, despite the launch of Agent HQ). However, Nadella makes a lot of points about the evolution of demand and competition that almost persuade me it's not a problem. I think his best point is that "If there is only one big AI model winner, then all bets on market dynamics likely go to zero for the other players, but as long as there is more than one competitive model (and ideally many), many vectors of competition will exist" (paraphrasing). One interpretation is that Microsoft and other large players will, paradoxically, keep hoping for (and facilitating) many model players to prevent any one player from getting too far ahead.

- Society is betting on AI – and the outcomes aren't looking good (w/ Nate Soares). Risky Business with Nate Silver and Maria Konnikova is one of my favorite regular podcasts, and this week they have an AI episode with Nate Soares, one of the more vocal "AI Doomer" voices. It's an interesting episode and covers Nate's new book, co-authored with Eliezer Yudkowsky: "If Anyone Builds It, Everyone Dies". The "it" refers to ASI (Artificial Super Intelligence). The book seems likely to become an anchor text for the argument against further fast AI research. I think it's important to consider such arguments (even though I think they are fundamentally incorrect about how long ASI will take to develop and about the nature of technology diffusion). The central (and useful) argument is that there are three potential outcomes of the current push for ASI: 1) it fails to achieve its goal; 2) it achieves its goal and can be contained/managed, in which case it would be controlled by a number of elite companies; 3) it achieves its goal and cannot be contained, leading (in many scenarios) to the extinction of humanity or even of life on Earth. The argument is that since none of these outcomes is actually desirable, shouldn't we stop work immediately to determine how to mitigate the risks? I think the downside scenarios should be in the calculus, but I also think there are many other outcomes not in this list of three. Specifically, better and better AI could emerge over many tens (and maybe hundreds) of years, available to many people, providing great benefits to humanity and counteracting the forces of destruction. One can certainly argue "why take the risk?", but in many ways the risk is already being taken, and it is hard to imagine a world where groups of humans will not always be working on building better and better AI.

  As such, I still believe the best course is to do as much of the work as possible out in the open, so that problems can be solved by everyone and control is not centralized.

- Disrupting the first reported AI-orchestrated cyber espionage campaign. As if to highlight some of the risks, Anthropic this week disclosed an episode in which it detected highly sophisticated use of Claude Code for cyberattacks. Claude Code allows deep automation of tasks, including code generation, and can run for many hours at a time without human intervention. This makes it well suited to long-running attacks that test defenses. While Claude is set up to reject obviously malicious requests, the attackers effectively managed to jailbreak the system and use it anyway, including by telling Claude it was working for a legitimate cybersecurity firm and by breaking tasks up into very small pieces. No doubt Anthropic will try to improve its guardrails to prevent the exact same attacks, but this will be hard to get perfectly right. Anthropic's long-term recommendation is... unsurprisingly, to use AI on the cyber defence side of the equation.
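Returning briefly to the DeepSeek OCR item above: the "10x" figure is a ratio of token counts, i.e. how many text tokens a page would cost versus how many vision tokens the encoder emits for an image of that page. The sketch below shows that arithmetic under loudly hypothetical assumptions: a ViT-style encoder that cuts the page image into square patches, followed by a compressor that merges a fixed number of patch tokens into one. The helper names, resolution, patch size, downsampling factor and text-token count are illustrative values chosen for round numbers, not figures taken from DeepSeek's release.

```python
import math


def vision_token_count(width_px: int, height_px: int, patch_px: int, downsample: int) -> int:
    """Tokens emitted for one page image by a hypothetical patch encoder.

    The image is split into patch_px x patch_px patches (partial patches at the
    edges count as whole ones), then every `downsample` patch tokens are merged
    into a single token (an assumed compressor design).
    """
    patches = math.ceil(width_px / patch_px) * math.ceil(height_px / patch_px)
    return math.ceil(patches / downsample)


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    return text_tokens / vision_tokens


# Illustrative values only: a 1024x1024 page render, 16-px patches, 16x merge.
v = vision_token_count(1024, 1024, patch_px=16, downsample=16)
print(v)  # 256

# A hypothetical dense page that would cost about 2,560 text tokens.
print(compression_ratio(2560, v))  # 10.0
```

The point this illustrates is that, under these assumptions, the vision-token budget depends on image resolution and the encoder's compression rather than on how much text is on the page, so a denser page raises the ratio (until, presumably, recognition accuracy starts to suffer).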

After a more apocalyptic edition than usual, wishing you a great weekend!